Understanding AI Safety: What It Means and Why It Matters

AI safety refers to the field of study focused on ensuring that artificial intelligence (AI) systems are developed and deployed in ways that mitigate risks and promote ethical outcomes. As AI technologies advance at an unprecedented pace, understanding AI safety becomes crucial. AI safety encompasses not only the technical reliability and robustness of AI systems but also their societal impacts.

One of the key aspects of AI safety is addressing the risks associated with autonomous systems. These systems, which operate largely independently of human control, pose significant challenges in terms of predictability and accountability. For instance, self-driving cars, while innovative, raise concerns about accidents, decision-making in critical situations, and even their potential to be exploited maliciously. If these risks are not rigorously assessed and monitored, they could result in harm, either physically to individuals or more broadly to societal structures.

Furthermore, ethical considerations play a pivotal role in discussions of AI safety. The deployment of AI must balance efficiency with ethical responsibility. Questions emerge regarding bias in algorithms, privacy violations, and the transparency of AI decision-making processes. An AI system that fails to incorporate ethical guidelines risks perpetuating societal inequalities or infringing on fundamental rights. Developing frameworks that prioritize safety and responsibility is essential to ensure trust in AI systems.

Ignoring AI safety protocols can have dire consequences. Without proper safeguards, the potential for misuse increases, leading to outcomes that could threaten social stability or endanger lives. As we navigate the complexities of AI development, it is imperative to establish comprehensive measures that uphold safety standards while harnessing the benefits of this powerful technology.

Recent Developments in AI Safety News

In recent months, the field of AI safety has seen significant developments that are shaping the industry landscape. One widely publicized incident involved an AI system that made a critical decision-making error during a healthcare trial. The incident raised questions about the reliability of AI technologies and prompted discussion of the need for stringent safety measures when deploying AI in sensitive fields. As a result, the healthcare sector is reevaluating its protocols for integrating AI tools, emphasizing the importance of accountability and transparency.

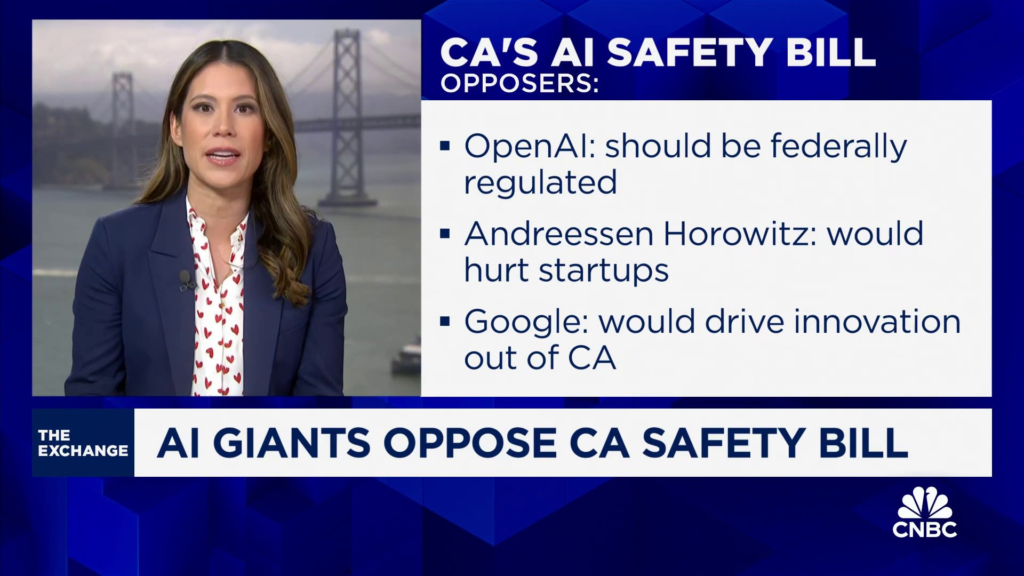

Regulatory bodies are also responding to these emerging concerns. The European Union has introduced regulation focused on AI safety, the AI Act, which sets out a risk-based framework for assessing AI systems. These rules are designed to ensure that AI systems are not only innovative but also safe for users, with the ultimate aim of strengthening public confidence in AI technologies. Experts believe that proactive regulatory measures are essential to mitigate the risks associated with AI, and public discussions reflect a growing awareness of the complexity surrounding AI safety.

Organizations within the tech industry are increasingly collaborating on AI safety initiatives. Several major technology companies have announced partnerships aimed at creating shared guidelines for safe AI development. These collaborative efforts reflect a collective recognition of the potential risks posed by AI and an understanding that safety is a shared responsibility. By prioritizing safety in product development, companies are taking steps toward building trust among users and stakeholders alike.

As we continue to monitor these developments, it is crucial to remain informed about the latest AI safety news. By understanding current trends, incidents, and regulatory measures, individuals and organizations can better navigate the complexities of AI technologies and contribute to a safer technological future.

Expert Opinions and Predictions: Where is AI Safety Heading?

The discourse surrounding AI safety has gained momentum as experts increasingly voice their opinions on the challenges and opportunities that lie ahead. Many leading professionals in the field believe that stricter regulation will be necessary to ensure the safe deployment of AI technologies. As AI systems become more integrated into critical sectors such as healthcare, transportation, and finance, the potential risks increase, making regulatory frameworks essential to mitigate these dangers.

Leading figures in AI safety have articulated the need for ethical AI design, emphasizing that developers must prioritize safety from the earliest design stages of AI systems. This perspective reinforces the idea that incorporating ethical considerations from the start can help prevent malfunctions and misuse of AI technologies. Recent AI safety coverage points to a growing consensus that organizations should adopt responsible AI practices, ensuring that systems behave predictably and do not inadvertently cause harm.

Predictions about technological advances in AI safety also occupy a significant part of expert discussion. Many suggest we will see the emergence of advanced monitoring tools capable of detecting anomalies in AI behavior in real time, enabling immediate intervention when necessary. Such innovations are seen as pivotal to enhancing AI safety, as they could help catch unforeseen issues that arise in complex AI operations before they cause harm.

In conclusion, the future direction of AI safety encompasses a blend of rigorous regulatory frameworks, a commitment to ethical AI design, and the advent of groundbreaking technological tools intended to bolster safety measures. Staying informed about AI safety news could be critical for stakeholders and the public alike, ensuring that the collective movement towards secure AI systems is well-guided and effectively monitored.

Staying Informed and Engaging in AI Safety

As artificial intelligence continues to evolve, staying informed about the latest AI safety news is essential for both individuals and organizations. This awareness not only supports informed decision-making but also fosters an environment conducive to responsible AI development. Maintaining updated knowledge can be achieved through various means, including following reputable news outlets that specialize in technology and AI. Subscribing to newsletters, blogs, or podcasts focused on AI risks and advancements can significantly enhance your understanding of emerging threats and best practices in the field.

Additionally, attending workshops and conferences related to AI safety provides individuals the opportunity to engage with experts and peers in the field. These events often feature discussions on current research, development strategies, and ethical principles surrounding AI. Networking opportunities can also arise during these gatherings, allowing participants to forge collaborations that advocate for safer AI systems.

Joining relevant forums or organizations focused on AI safety is another proactive way to contribute to the cause. By participating in discussions and sharing resources within these communities, individuals can exchange valuable insights and support collective advocacy efforts. Partnering with organizations that prioritize safety in AI development, such as the Partnership on AI or similar networks, can amplify one’s impact in promoting safe practices in the AI landscape.

Lastly, raising awareness within one’s own community about AI safety standards can lead to meaningful discussions and initiatives. Educating others about the potential risks associated with AI and advocating for ethical development can create a ripple effect toward more robust safety protocols. As communities collaborate to demand and implement better safety measures, the standards guiding AI practices are likely to improve, contributing to a safer technological future.